Rethinking Human-in-the-Loop AI

You’ve heard the term “human-in-the-loop” thrown around AI conversations for a while now. It’s become shorthand for keeping humans involved in AI systems, usually positioned as some kind of quality check or safety mechanism. Someone types a simple, cookie-cutter prompt into an LLM. The AI spits out an answer. A human reviews the output, gives it a thumbs up or thumbs down, and that’s that.

But here’s the thing: That’s not really what human-in-the-loop is about. Or at least, it shouldn’t be.

What if we stopped thinking about humans as passive reviewers sitting at the end of an AI pipeline, and instead recognized them as the critical infrastructure that makes the entire system work? Because that’s what’s actually happening in any effective AI implementation.

Let me explain what I mean.

The Three-Part Framework

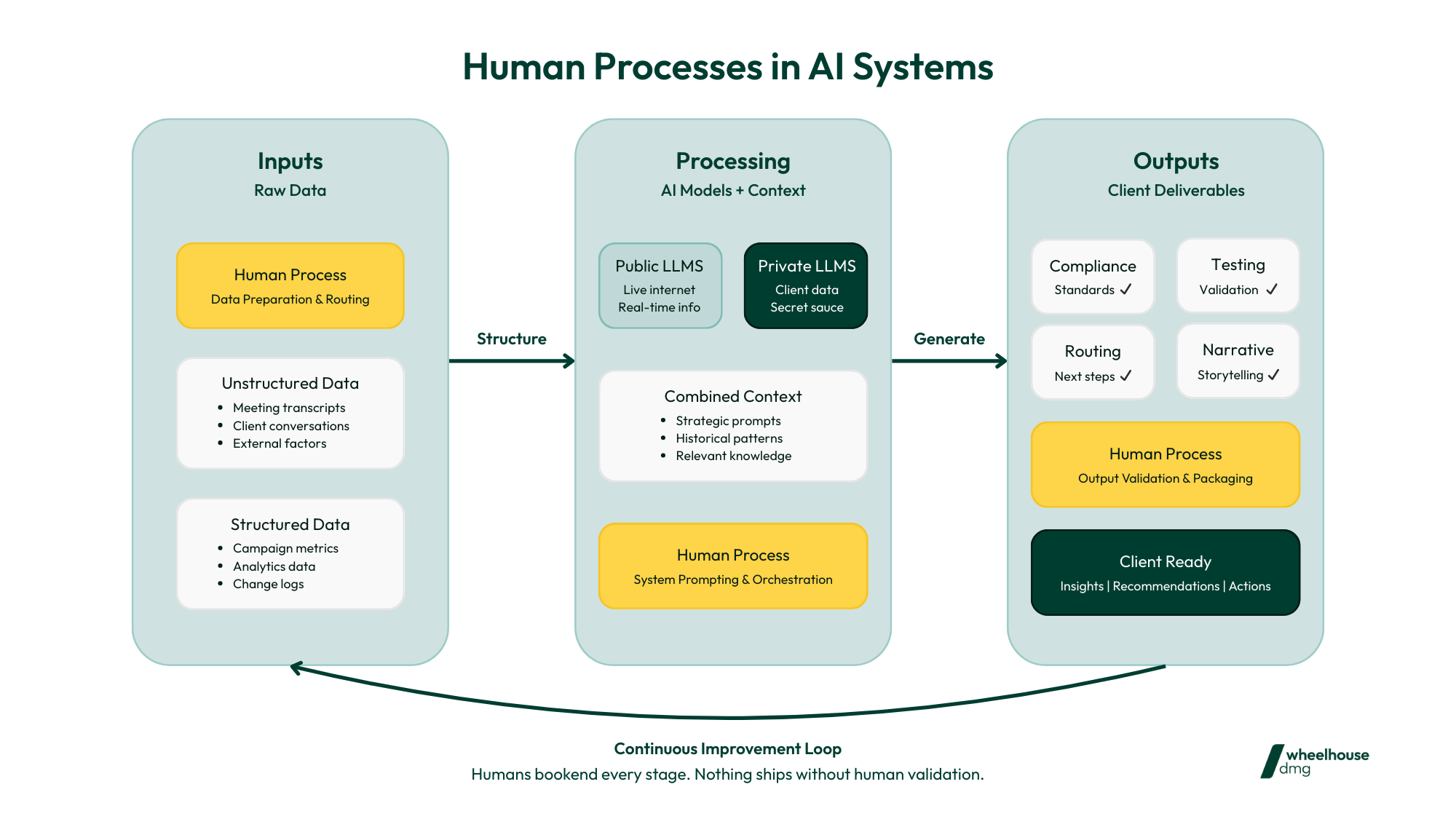

When we map out how AI actually functions in a marketing analytics context, or really any business context, we get three main components:

Inputs → Processing → Outputs

Simple enough, right? Raw data goes in, AI does its thing, and artifacts come out the other side.

But that’s not the full picture. It’s never that clean.

The reality is that humans are doing critical work in the spaces between these stages. We’re not just checking boxes at the end. We’re structuring unstructured data before it goes into the system. We’re routing information to the right processing channels. We’re validating outputs against compliance requirements. We’re testing whether the thing actually works before we ship it to a client.

These aren’t interruptions to the AI workflow. They’re essential components of it.

Breaking Down the Human Processes

Let’s get specific about what’s actually happening at each stage.

The Input Layer: Structuring the Chaos

We need a way to structure unstructured data. The volume of inputs on the left side of this map keeps increasing. The actions we’ve taken, essentially the change log, are one example, and that record is only going to grow.

Think about everything that flows into a marketing analytics system:

- Raw campaign data from multiple platforms

- Conversations and meeting transcripts

- Change logs documenting every optimization

- External factors (weather, economic indicators, competitive moves)

- Structured data from databases

- Unstructured observations from your team

None of this is AI-ready out of the box. Someone has to make decisions about what gets fed into which system. Someone has to tag it, categorize it, and determine what’s actually relevant.

That’s human process number one: Data preparation and routing.
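To make that concrete, here is a minimal sketch of what a tagging step could look like. Everything in it is an assumption for illustration: the `InputRecord` type, the source names, and the tag vocabulary are hypothetical, and the rules table is exactly the kind of thing a person on the team would own and maintain.

```python
from dataclasses import dataclass, field
from typing import Set

# Hypothetical tagging rules, maintained by a human, keyed by input source.
SOURCE_TAGS = {
    "campaign_data": {"structured"},
    "database": {"structured"},
    "change_log": {"structured", "internal"},
    "external_factor": {"structured", "public"},
    "meeting_transcript": {"unstructured", "internal"},
    "team_observation": {"unstructured", "internal"},
}


@dataclass
class InputRecord:
    source: str
    text: str
    tags: Set[str] = field(default_factory=set)
    relevant: bool = True


def prepare(record: InputRecord) -> InputRecord:
    """Tag a record by source; unknown sources are flagged for a person."""
    known_tags = SOURCE_TAGS.get(record.source)
    if known_tags is None:
        # No rule exists yet, so a human has to decide what this is.
        record.tags = {"needs_human_review"}
    else:
        record.tags = set(known_tags)
    # Empty inputs are marked as not relevant rather than silently dropped.
    record.relevant = bool(record.text.strip())
    return record
```

The point of the sketch is the fallback: anything the rules don’t cover goes to a person instead of being guessed at.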

The Processing Layer: Widening the Choke Point

The other component of the tech stack is widening the choke point between the data we have and what we can actually pass to an LLM in a prompt for it to process.

Here’s where it gets interesting. We’re thinking about bifurcating processing. Some data goes out to public LLMs, which are connected to the live internet and can ingest real-time information about how the world works. The rest goes to private LLMs, where we can securely analyze internal data: brainstorming conversations, client discussions, recording transcripts, all of the secret-sauce learnings that we have.

This isn’t a technical decision. It’s a strategic one.

Someone has to decide:

- Which data goes to public vs. private models?

- What context does the AI need to answer this specific question?

- How do we combine outputs from multiple processing streams?

- When do we need AI reasoning vs. straight data retrieval?

That’s human process number two: System prompting and orchestration.
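The decisions in that list can be written down as explicit rules. The sketch below is one hypothetical way to do it, not a description of a real system: the `Destination` names and the `SENSITIVE_TAGS` set are assumptions, and the rule ordering is itself a strategic choice a human makes.

```python
from enum import Enum
from typing import Set


class Destination(Enum):
    PUBLIC_LLM = "public_llm"
    PRIVATE_LLM = "private_llm"
    DATA_RETRIEVAL = "data_retrieval"


# Hypothetical tags marking data that should never leave private systems.
SENSITIVE_TAGS = {"internal", "client", "transcript"}


def route(tags: Set[str], needs_reasoning: bool) -> Destination:
    """Pick a processing stream from human-assigned tags."""
    if not needs_reasoning:
        # A straight lookup does not need a model at all.
        return Destination.DATA_RETRIEVAL
    if tags & SENSITIVE_TAGS:
        # Anything sensitive stays on the private side.
        return Destination.PRIVATE_LLM
    return Destination.PUBLIC_LLM
```

For example, `route({"client", "unstructured"}, needs_reasoning=True)` returns `Destination.PRIVATE_LLM`, while a question about public market conditions with no sensitive tags goes to the public model.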

The Output Layer: Making It Client-Ready

Then we’re combining those two technical outputs into various artifacts. It could be instructions for the next step of AI processing, or it could be literally a client deliverable that we ship.

The challenge for 2026 is going to be expanding the bottleneck so that we can pass more data into the LLMs, both private and public. That’s the problem we’re solving for next year.

The problem we solve for in 2027 is that the LLMs create a ton (and I mean a ton) of outputs. Which of those are actually client-worthy? And what kind of processes do we put in place so that humans can turn those into client-ready deliverables?

This is where most people think “human-in-the-loop” happens. But it’s not just quality control. It’s also:

- Compliance — Does this meet regulatory standards?

- Routing — Where does this output need to go next?

- Testing — Does this actually solve the problem it’s supposed to solve?

- Narrative building — How do we turn data insights into client-ready stories?

That’s human process number three: Output validation and packaging.
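One way to keep those four checks from collapsing back into a single thumbs up is to track a named sign-off for each. This is a sketch under assumptions: the `OutputReview` class and the check names are hypothetical and simply mirror the list above.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# The four human checks from the list above, in review order.
CHECKS = ("compliance", "routing", "testing", "narrative")


@dataclass
class OutputReview:
    artifact: str
    signoffs: Dict[str, str] = field(default_factory=dict)  # check -> reviewer

    def sign_off(self, check: str, reviewer: str) -> None:
        """Record that a named person approved one specific check."""
        if check not in CHECKS:
            raise ValueError(f"Unknown check: {check}")
        self.signoffs[check] = reviewer

    def pending(self) -> List[str]:
        """Checks that still need a human decision."""
        return [c for c in CHECKS if c not in self.signoffs]

    def client_ready(self) -> bool:
        """Only ready to ship once every check has a sign-off."""
        return not self.pending()
```

An artifact with only a compliance sign-off still reports `routing`, `testing`, and `narrative` as pending, so it is not treated as client-ready.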

Why This Matters for AI Strategy

When you start thinking about AI systems this way, a few things become clear:

First, your technology choices need to be modular.

This is modular technology that we’re renting and modular human frameworks that we’re applying to the system. We design the system with places where new technology can be swapped into a given task, so that when any component becomes outdated or is deprecated in favor of some huge new model, we can replace it without rebuilding everything else.

You can’t hitch your wagon to one startup’s AI tech. You need three different ways to solve each problem so that you always have a backup plan.
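In code terms, a backup plan can be as simple as an ordered list of interchangeable providers. The sketch below assumes each provider is just a function that takes a task and returns text; `vendor_a` and `vendor_b` are stand-ins, not real services.

```python
from typing import Callable, List, Sequence

# A provider is any callable that takes a task description and returns text.
Provider = Callable[[str], str]


def run_with_fallback(task: str, providers: Sequence[Provider]) -> str:
    """Try each provider in order and return the first successful result."""
    errors: List[str] = []
    for provider in providers:
        try:
            return provider(task)
        except Exception as exc:  # any failure moves on to the next option
            name = getattr(provider, "__name__", repr(provider))
            errors.append(f"{name}: {exc}")
    raise RuntimeError("All providers failed: " + "; ".join(errors))


# Hypothetical stand-ins for three ways of solving the same task.
def vendor_a(task: str) -> str:
    raise TimeoutError("service deprecated")


def vendor_b(task: str) -> str:
    return f"summary of {task}"


result = run_with_fallback("weekly performance", [vendor_a, vendor_b])
```

Swapping in a new model then means editing the provider list, not the workflow around it.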

Second, you need new kinds of roles.

We need people to vet both the inputs and the outputs of the technology at any given time, and to research whether or not there are better component parts to swap in.

Traditional analyst roles are transforming. We’ve already automated metrics in dashboards and PowerPoints. What needs automation next is insights: the manual time spent comparing period over period, looking for trends, going back to the change log to figure out what drove performance.

But even when insights are automated, strategists are still needed to validate and interpret the outputs from AI. There’s still a level of strategic guidance that we’ll have to provide for any recommendations it makes.

Third, your investment is in people, not just technology.

The cost is going to be in investing in people. The cost is us coming together and working through these kinds of things together, maybe adding new roles.

AI platforms are getting cheaper. The expensive part is building the human infrastructure that makes those platforms actually useful.

What This Looks Like in Practice

Here’s a concrete example from our world:

We’re building a system where we can capture client questions during calls, analyze them to identify patterns, and then proactively prepare analysis based on those questions before the next meeting. Then we generate narrative reporting outputs that go beyond just data and insights to include insight stories and recommendations.

Sounds like an AI pipeline, right? But look at the human processes involved. Someone has to:

- Structure the meeting transcripts (input preparation)

- Decide which questions warrant proactive analysis (routing)

- Validate that the analysis actually answers the question (quality control)

- Craft the narrative that makes the insight actionable (packaging)

- Determine whether this is ready to send to the client (compliance)

At each stage, humans are doing critical work that determines whether the whole system succeeds or fails.
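The pattern-spotting step in that example could start out as something very simple. Here is a rough sketch that counts recurring topics across client questions; the `TOPIC_KEYWORDS` map is a hypothetical, human-maintained list, and keyword matching is a stand-in for whatever analysis a real system would use.

```python
from collections import Counter
from typing import List, Set, Tuple

# Hypothetical topic keywords, curated by the team.
TOPIC_KEYWORDS = {
    "budget": ("budget", "spend", "cost"),
    "attribution": ("attribution", "credit"),
    "creative": ("creative", "ad copy"),
}


def topics_for(question: str) -> Set[str]:
    """Return every topic whose keywords appear in the question."""
    q = question.lower()
    return {
        topic
        for topic, keywords in TOPIC_KEYWORDS.items()
        if any(keyword in q for keyword in keywords)
    }


def recurring_topics(questions: List[str], min_count: int = 2) -> List[Tuple[str, int]]:
    """Topics raised at least min_count times, most frequent first."""
    counts = Counter(t for q in questions for t in topics_for(q))
    return [(t, n) for t, n in counts.most_common() if n >= min_count]
```

A list like this only surfaces candidates. Someone still decides which recurring topics warrant proactive analysis before the next meeting.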

The Analyst Role in 2027

What does the analyst position look like in 2027? It’s going to be really different. I don’t know exactly what it looks like, but it’s going to change.

Platforms are automating campaign builds and creative builds. We’re automating insight generation. We’re building systems that can test and iterate faster than ever before.

But someone still needs to:

- Guide the AI toward the right questions

- Validate that recommendations will actually resonate with customers

- Interpret whether the patterns AI finds are meaningful or just noise

- Make strategic bets about where to focus resources

- Decide when to override the algorithm

The analyst of 2027 isn’t going to spend time pulling numbers into spreadsheets. They’re going to spend time making judgment calls about what those numbers actually mean.

That’s still a human job. It’s just a very different one.

The Bottom Line

Human-in-the-loop isn’t about adding a human checkpoint at the end of an AI process.

It’s about recognizing that effective AI systems have always required humans to do critical work at every stage: structuring inputs, orchestrating processing, and validating outputs.

The difference now is that we need to be intentional about designing those human processes instead of treating them as afterthoughts.

We know AI is really good at pattern matching, probability calculation, and generating outputs at scale. What it can’t do is make strategic judgment calls about what patterns matter, which probabilities are worth acting on, or which outputs are actually ready to ship.

That’s still an analyst’s job. And honestly, it’s a better job than the one before: less time in spreadsheets, more time thinking strategically about what actually drives results.

So the next time someone talks about “human-in-the-loop AI,” ask them a simple question:

What are the actual human processes that make this system work?

Because if they can’t answer that, they probably don’t understand their AI system as well as they think they do.

If you’d like to talk more about building human processes into your AI strategy, that’s exactly the kind of thing we’re working through at Wheelhouse. Reach out to compare notes.
